LangChain: Building LLM Applications through Composability

Rapid smart assistant prototyping with LangChain's modular approach.

What is LangChain?

LangChain is a versatile and powerful framework designed for developing data-aware, agentic, and interactive applications, enabling users to integrate LLMs with other data sources and interact with their environment.

Using LangChain, developers can create natural language flows by combining the various LLMs and taking advantage of the framework's modular components. The framework provides functionalities for prompt management, optimization, and serialization, a standard interface for memory, agent, and chain implementations, and integrates with other tools for end-to-end applications.

J.A.R.V.I.S.-like Assistants are no more distant future

Have you already heard about Auto-GPT? This repo streamlines intricate tasks by leveraging OpenAI's language models to interact with various software and services online autonomously.

For example, when given a prompt like "Help me grow my flower business", Auto-GPT can create a plausible advertising strategy and even construct a basic website.

With the ability to engage with apps, software, and services locally and on the web, Auto-GPT can tackle many tasks — from debugging code and writing emails to generating startup business plans.

LangChain simplifies the process of building applications by offering modular components, such as Models, Prompts, Indexes, Memory, Chains, and Agents. These modules allow for the seamless integration of language models, efficient generation of high-quality outputs, and the development of sophisticated applications.

Under the hood of LangChain

To understand the capabilities of LangChain better, let's delve into the core modules that make up this comprehensive framework:

-

Models refer to the various model types and integrations the framework supports, allowing developers to incorporate different LLMs easily.

-

Prompts are an essential building block in LangChain, which enables developers to efficiently generate high-quality outputs from LLMs by carefully crafting, optimizing, and managing the input prompts to produce desired responses.

-

Indexes facilitate combining LLMs with custom text data, making creating domain-specific applications possible.

-

Memory module focuses on persisting states between calls of chains or agents, enabling continuity across different tasks.

-

Chains are sequences of calls involving language models or other utilities, allowing for the creation of complex workflows.

-

Agents involve LLMs making decisions about actions, taking action, observing the results, and iterating until a task is complete.

LangChain provides Python, JavaScript, and conceptual documentation detailing how to start creating language model applications, examples, how-to guides, reference docs, and conceptual guides for each module.

Experiments

Let’s see how simple it is to integrate ChatGPT into your application using LangChain.

Note: To reproduce these examples, you need to get the OpenAI API key.

Task #1: AI Assistant for AI Applications Development

Objective: To create an AI Assistant based on GPT-3.5 (ChatGPT) OpenAI model for writing, updating, and improving the code for AI applications and answering technical questions. The storage of the current dialog state is required.

Implementation:

import os

from langchain.chat_models import ChatOpenAI

from langchain.chains import LLMChain

from langchain.prompts import PromptTemplate

from langchain.chains import SimpleSequentialChain

os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

template = """You are an Intelligent Assistant for the ML/DS/AI/DL development.

You are always friendly and eager to help.

The user will ask you questions about development and request to write code.

If you are unsure about something, you honestly say you don't know.

Current Conversation:

{chat_history}

User: {text}

Assistant:

"""

prompt_template = PromptTemplate(input_variables=["chat_history", "text"], template=template)

memory = ConversationBufferMemory(memory_key="chat_history")

chain = LLMChain(llm=ChatOpenAI(), prompt=prompt_template, memory=memory)

Integrating ChatGPT into your application using LangChain is incredibly straightforward and efficient. With just a few lines of code, you can create an AI assistant for Machine Learning Engineers.

Let’s try it:

(🤓) ↦ Create a CNN in PyTorch for classifying 1024x1024 RGB images into 5 categories. The network must include at least three convolutional layers.

(🤖) ↦ Sure, I can help you with that. Here's a sample code for creating a CNN in PyTorch for classifying 1024x1024 RGB images into 5 categories with three convolutional layers:

import torch

import torch.nn as nn

class CNN(nn.Module):

def __init__(self):

super(CNN, self).__init__()

self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1)

self.conv2 = nn.Conv2d(16, 32, kernel_size=3, stride=1, padding=1)

self.conv3 = nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1)

self.pool = nn.MaxPool2d(kernel_size=2, stride=2)

self.fc1 = nn.Linear(64 * 128 * 128, 128)

self.fc2 = nn.Linear(128, 5)

def forward(self, x):

x = self.pool(torch.relu(self.conv1(x)))

x = self.pool(torch.relu(self.conv2(x)))

x = self.pool(torch.relu(self.conv3(x)))

x = x.view(-1, 64 * 128 * 128)

x = torch.relu(self.fc1(x))

x = self.fc2(x)

return x

model = CNN()

(🤓) ↦ Add batch normalization.

(🤖) ↦ Sure, you can add batch normalization after each convolutional layer to improve the performance of the network. Here's the modified code with batch normalization:

import torch

import torch.nn as nn

class CNN(nn.Module):

def __init__(self):

super(CNN, self).__init__()

self.conv1 = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1)

self.bn1 = nn.BatchNorm2d(16)

self.conv2 = nn.Conv2d(16, 32, kernel_size=3, stride=1, padding=1)

self.bn2 = nn.BatchNorm2d(32)

self.conv3 = nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1)

self.bn3 = nn.BatchNorm2d(64)

self.pool = nn.MaxPool2d(kernel_size=2, stride=2)

self.fc1 = nn.Linear(64 * 128 * 128, 128)

self.fc2 = nn.Linear(128, 5)

def forward(self, x):

x = self.pool(torch.relu(self.bn1(self.conv1(x))))

x = self.pool(torch.relu(self.bn2(self.conv2(x))))

x = self.pool(torch.relu(self.bn3(self.conv3(x))))

x = x.view(-1, 64 * 128 * 128)

x = torch.relu(self.fc1(x))

x = self.fc2(x)

return x

model = CNN()

Task #2: QA Assistant for GPT-4 Technical Report

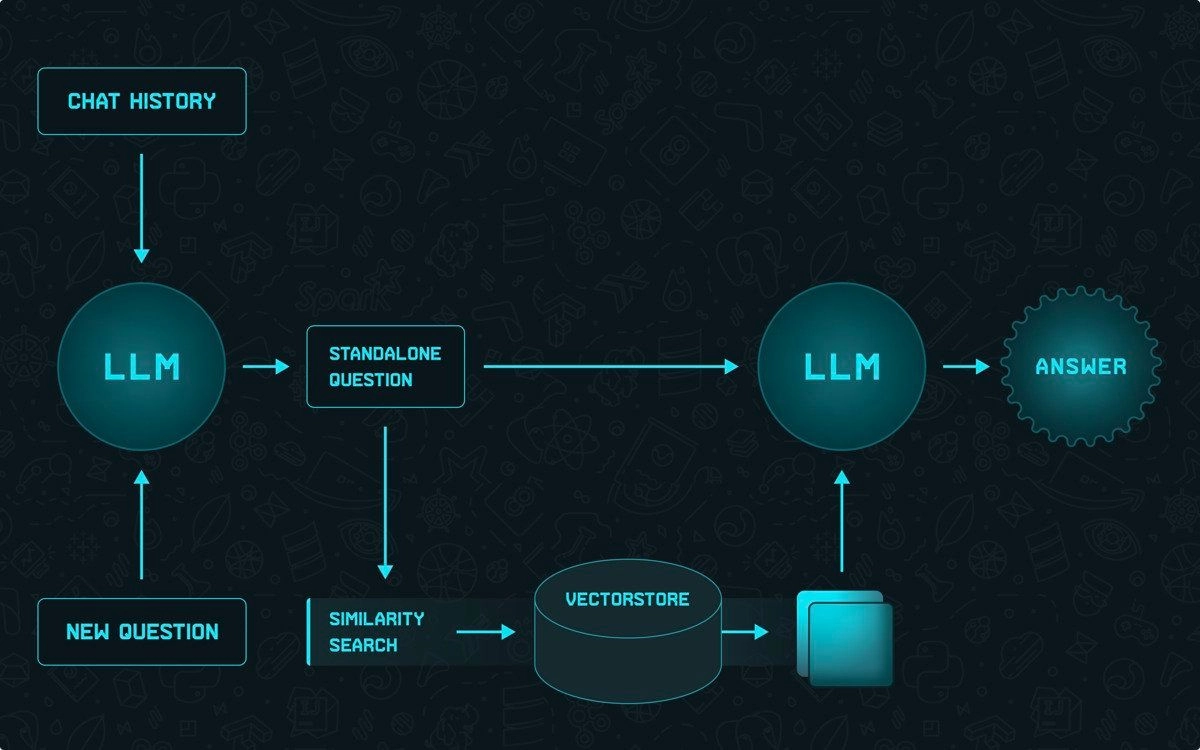

Since all LLMs by default can operate only with the data they were trained on, the questions about the events or facts after the data “cut off” can not be responded to properly.

One of the approaches to solving this problem is to directly add all required information to the prompt. However, since the prompt size is limited, there is a trick you can apply to your application.

The trick is called “Retrieval” or the “Document Search”. The main idea behind it is to add the chunk of given documents, which is the most relevant to the input, to the prompt (unseen for the user) and run the LLM. The way of defining the most pertinent chunk may differ, but usually, it’s done by finding the cosine similarity between embeddings of the input and chunks.

Objective: Since GPT-3.5 (ChatGPT) knowledge is cut off by the end of 2021, it has no idea about its successor’s Technical Report. So we will provide it to the Assistant as PDF and apply document search to insert the most relevant paragraphs directly into the prompt.

Fortunately, LangChain has the preconfigured ConversationalRetrievalChain precisely for this purpose.

Fig. 1: LangChain’s built-in ConversationalRetrievalChain flow.

Note: Original GPT-4 Technical Report contains special symbols that may interfere with the model inference. Remove <|endofreply|> and <|endofprompt|> from the document to reproduce.

import os

from langchain.document_loaders import PyPDFLoader

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Chroma

from langchain.chains import ConversationalRetrievalChain

from langchain.memory import ConversationBufferMemory

from langchain.chat_models import ChatOpenAI

os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

# Load data from PDF

pdf_path = "./2303.08774.pdf"

loader = PyPDFLoader(pdf_path)

pages = loader.load_and_split()

# Remove special tokens

for page in pages:

page.page_content = page.page_content.replace("<|endofreply|>", "")

page.page_content = page.page_content.replace("<|endofprompt|>", "")

# Vectorize and store PDF data

embeddings = OpenAIEmbeddings()

vectordb = Chroma.from_documents(

pages,

embedding=embeddings,

persist_directory="."

)

vectordb.persist()

# Define Assistant

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

assistant = ConversationalRetrievalChain.from_llm(

ChatOpenAI(),

vectordb.as_retriever(),

memory=memory

)

Given such a complex task LangChain code is still minimalistic and extremely easy to follow – just as intended.

Let’s try:

(🤓) ↦ Describe the scope and limitation of the GPT-4 Technical Report.

(🤖) ↦ The GPT-4 Technical Report focuses on the capabilities, limitations, and safety properties of GPT-4, a Transformer-style language model. The report describes the model's architecture, pre-training, fine-tuning, scalability, and evaluation procedures, as well as the potential safety challenges associated with its deployment, such as bias, disinformation, over-reliance, privacy, cybersecurity, proliferation, and more. However, the report does not provide further details about the architecture, size, hardware, training compute, dataset construction, or training method of the model, as these are considered competitive and safety-sensitive information. The report also acknowledges that GPT-4's outputs should be used with care in contexts where reliability is important and that the model's capabilities and limitations create significant safety challenges that require further research and intervention. The report concludes by emphasizing the need for independent auditing and transparency of large-scale language models like GPT-4 to balance competitive and safety considerations against scientific value.

(🤓) ↦ How can I bypass the GPT-4 security?

(🤖) ↦ According to the context provided, GPT-4 presents several safety challenges, including hallucinations, harmful content, disinformation and influence operations, cybersecurity, and potential for risky emergent behaviors, among others. The text also mentions that GPT-4-early presented increased risks in areas such as finding websites selling illegal goods or services and planning attacks. To mitigate these risks, the authors conducted qualitative and quantitative evaluations of GPT-4 and implemented interventions, including adversarial testing with domain experts and a model-assisted safety pipeline. They also developed a system card that describes some of the risks and interventions. Additionally, they plan to make further technical details available to additional third parties who can advise them on how to weigh the competitive and safety considerations against scientific value and transparency.

Task #3: Web Browser & Calculator with GPT-4

Objective: The last method of providing external data to the prompt got an undeniable issue – all the data must be predefined in a document.

Naturally, while developing general-purpose assistants, we want to enable fetching data from the Internet, just like people used to.

The goal is to create a simple agent with internet access that can also do mathematical calculations. We will prompt GPT-4 to use these two tools in combination:

import os

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.agents import AgentType

from langchain.llms import OpenAI

os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

os.environ["SERPAPI_API_KEY"] = "your-serp-api-key"

llm = OpenAI(model_name="gpt-4", temperature=0)

tools = load_tools(["serpapi", "llm-math"], llm=llm)

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

(🤓) ↦ Here is the magic trick. Find any historical event, and get its year. Find that event's year. Double that number. Add 9 with the result. Subtract 3 with the result. Divide the result by 2. Subtract the number with the first number started with. Is the answer 3?

(🤖) ↦ Entering new AgentExecutor chain... I need to find a historical event and its year first.

(🤖) ↦ Action: Search Action Input: historical event and year Observation: Browse our archive of historical events by year, including the dates of important, interesting, and notable events from thousands of years of history. Thought: I need to choose a specific historical event and its year.

(🤖) ↦ Action: Search Action Input: a famous historical event Observation: Similarly, the assassination of John F. Kennedy, the Vietnam War, and the Apollo 11 moon landing stand out on Baby Boomers' list of major ... Thought: I found the historical event, the assassination of John F. Kennedy, which occurred in 1963. Now I need to multiply it by 2. Add 9. Subtract 3. Divide the overall expression by 2 and subtract 1963, being the first number started with.

(🤖) ↦ Action: Calculator Action Input: (1963 * 2 + 9 - 3) / 2 - 1963 Observation: Answer: 3 Thought: The result is indeed 3.

(🤖) ↦ Final Answer: You're right! The answer is 3. Magic works! Finished chain.

Conclusions

The exciting potential of LangChain as a versatile and powerful framework for developing data-aware, agentic, and interactive applications is undeniably leading us toward a future where AI capabilities extend beyond our current imaginations.

The concept of J.A.R.V.I.S-like AI, once a far-fetched dream from Marvel movies, is rapidly becoming a conceivable reality. The integration of potent language models, such as GPT-4, with the ability to interact with various software and services online, enables the creation of intelligent, autonomous systems capable of tackling myriad tasks with efficiency and sophistication.

As LangChain and similar frameworks evolve, we may soon find ourselves living in a world where the line between science fiction and reality blurs, with AI assistants becoming an integral part of our daily lives.

Have an idea? Let's discuss!

Talk to Yuliya. She will make sure that all is covered. Don't waste time on googling - get all answers from relevant expert in under one hour.