Graph Neural Networks (GNN)

Overview of the state of Machine Learning on Graphs.

What is a GNN?

A Graph Neural Network (GNN) is a class of machine learning models that operates on graph-structured data and provides a flexible framework for learning from it. The key idea is to represent each node in the graph as a vector that captures its properties and relationships. These node vectors are then updated by aggregating information from each node's neighbors, and because every step is differentiable, gradients can flow through the graph structure during training.
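To make the update idea concrete, here is a minimal sketch of one neighborhood-aggregation step in plain Python. It assumes one simple choice among many: each node averages its own vector with its neighbors' vectors, multiplies the result by a weight matrix `W`, and applies a ReLU. The helper names (`gnn_layer`, `matvec`, `relu`) are hypothetical, and in a real model `W` would be learned by gradient descent rather than fixed by hand.

```python
def relu(vec):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in vec]


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def gnn_layer(features, adjacency, W):
    """One round of neighborhood aggregation (hypothetical helper).

    features:  dict node -> feature vector (list of floats)
    adjacency: dict node -> list of neighboring nodes
    W:         weight matrix applied after aggregation
    """
    updated = {}
    for node, h in features.items():
        # Include the node itself so it keeps part of its own information.
        group = [h] + [features[u] for u in adjacency.get(node, [])]
        mean = [sum(col) / len(group) for col in zip(*group)]
        updated[node] = relu(matvec(W, mean))
    return updated


# A three-node path graph a - b - c with 2-dimensional node features.
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
W = [[1.0, -1.0], [0.0, 1.0]]

out = gnn_layer(features, adjacency, W)
print(out["a"], out["c"])  # [0.0, 0.5] [0.0, 1.0]
```

Stacking k such layers lets each node's vector depend on nodes up to k hops away.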

The main motivation behind GNNs is data. A huge amount of data is naturally represented as graphs, with nodes as entities and edges as relationships between them. Examples include social networks, transportation networks, and molecular structures. On tasks that involve graph-structured data, GNNs often outperform traditional machine learning algorithms, and they have become an active area of research in the deep learning community.

Who proposed it, and when? What are the most famous GNN-based algorithms?

The motivation behind GNNs can be traced back to the 1990s and to work on Recursive Neural Networks, which were first applied to directed acyclic graphs (Sperduti and Starita, 1997; Frasconi et al., 1998). However, it was not until the mid-2010s that GNNs gained significant attention in the machine learning community.

One of the most influential early works on GNNs is the paper "Semi-Supervised Classification with Graph Convolutional Networks" by Kipf and Welling in 2016. This paper introduced a simple and effective graph convolutional network architecture, called GCN, for node classification on graph-structured data. Since then, many variants of GCN have been proposed.

- Paper: https://arxiv.org/../1609.02907

- Papers with Code: https://paperswithcode.com/../gcn
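The following is a small dense-matrix sketch of a single GCN layer, following the propagation rule from the Kipf and Welling paper, H' = σ(D^(-1/2) (A + I) D^(-1/2) H W), where adding I gives every node a self-loop and D is the degree matrix of A + I. ReLU stands in for σ, the helper names are hypothetical, and real implementations use sparse matrices instead of nested lists.

```python
import math


def gcn_normalize(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a dense 0/1 adjacency matrix."""
    n = len(adj)
    # Add self-loops so each node also keeps its own features.
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def matmul(A, B):
    """Dense matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def gcn_layer(adj, H, W):
    """One GCN layer: ReLU(A_norm @ H @ W)."""
    Z = matmul(matmul(gcn_normalize(adj), H), W)
    return [[max(0.0, z) for z in row] for row in Z]


# Two connected nodes with 1-dimensional features and an identity weight.
adj = [[0, 1], [1, 0]]
H = [[1.0], [3.0]]
W = [[1.0]]
print(gcn_layer(adj, H, W))  # [[2.0], [2.0]]
```

The symmetric normalization keeps high-degree nodes from dominating the sum, which is one reason the rule trains stably when several layers are stacked.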

Here are some other important architectures and methods that have driven the Graph Machine Learning field forward:

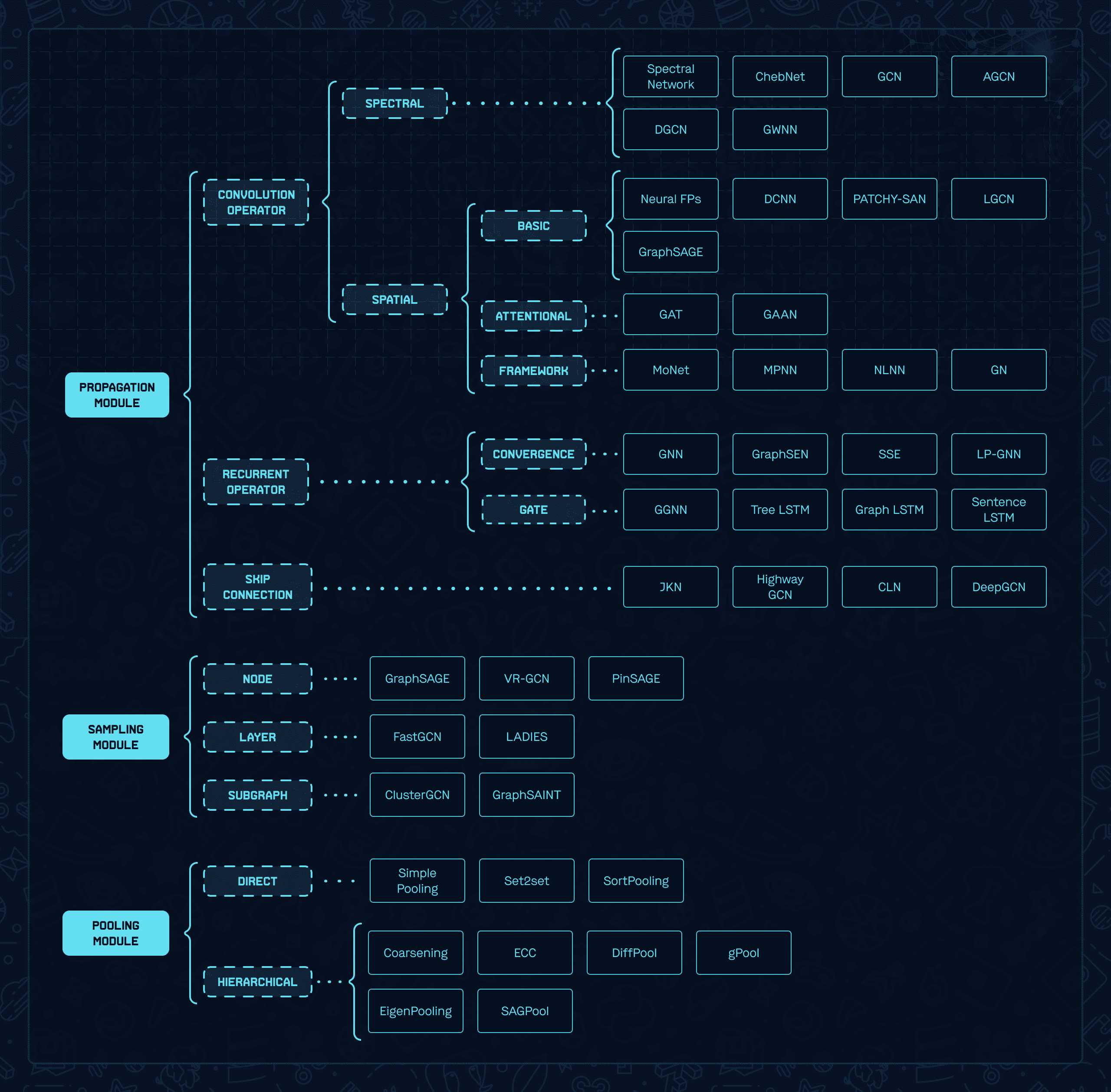

(1) Message Passing Neural Networks (MPNN): a general framework built around the notion of messages exchanged between nodes, introduced by Gilmer et al. in "Neural Message Passing for Quantum Chemistry" in 2017. Since then, MPNNs have become a popular approach for graph-related tasks such as predicting molecular properties and protein-protein interactions, as well as for recommender and transportation systems.

- Abstract: https://arxiv.org/../1704.01212v2

- Papers with Code: https://paperswithcode.com/../mpnn
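In the MPNN framework, at each step nodes send messages along edges, sum the incoming messages, and update their states; after several steps, a readout function maps all node states to a graph-level output. The sketch below follows that structure, but the specific message, update, and readout functions are hypothetical, deliberately simple stand-ins for the learned neural networks used in practice.

```python
import math


def message(h_v, h_w, e_vw):
    """Hypothetical message M(h_v, h_w, e_vw): neighbor state scaled by the edge feature."""
    return [e_vw * x for x in h_w]


def update(h_v, m_v):
    """Hypothetical update U(h_v, m_v): tanh of old state plus aggregated message."""
    return [math.tanh(a + b) for a, b in zip(h_v, m_v)]


def readout(states):
    """Hypothetical readout R: sum of final node states (invariant to node order)."""
    return [sum(col) for col in zip(*states.values())]


def mpnn(h, edges, steps):
    """Run message passing.

    h:     dict node -> state vector
    edges: dict (w, v) -> scalar edge feature; messages flow from w into v
    """
    for _ in range(steps):
        new_h = {}
        for v, h_v in h.items():
            # Sum the messages from every incoming edge (w -> v).
            m_v = [0.0] * len(h_v)
            for (w, dst), e_vw in edges.items():
                if dst == v:
                    m_v = [a + b for a, b in zip(m_v, message(h_v, h[w], e_vw))]
            new_h[v] = update(h_v, m_v)
        h = new_h  # all nodes update synchronously
    return readout(h)


# Two atoms joined by a bond, stored as two directed edges with feature 2.0.
h0 = {"a": [1.0], "b": [0.0]}
bonds = {("a", "b"): 2.0, ("b", "a"): 2.0}
y = mpnn(h0, bonds, steps=1)
print(y)
```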

(2) Graph Attention Networks (GAT): GAT is a type of GNN that uses attention mechanisms to aggregate information from the neighbors of each node in the graph. Attention lets the model learn coefficients that, intuitively, express how important each neighbor is to a given node for solving the optimization objective. GAT was introduced in the paper "Graph Attention Networks" by Veličković et al. in 2017 and has been applied to tasks such as node classification and link prediction.

- Abstract: https://arxiv.org/../1710.10903

- Papers with Code: https://paperswithcode.com/../gat
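The GAT paper scores each neighbor j of node i with e_ij = LeakyReLU(a^T [W h_i || W h_j]), normalizes these scores with a softmax over i's neighborhood (including i itself) to obtain the attention coefficients alpha_ij, and then takes the alpha-weighted sum of the transformed neighbor features. A single-head, plain-Python sketch of that computation follows; the final nonlinearity and multi-head attention are omitted, the function names are hypothetical, and `W` and `a` would normally be learned.

```python
import math


def leaky_relu(x, slope=0.2):
    """LeakyReLU with negative slope 0.2, as used in the GAT paper."""
    return x if x > 0 else slope * x


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def gat_node(i, neighborhood, h, W, a):
    """Single-head attention for node i.

    neighborhood: nodes attended to by i (should include i itself)
    a:            attention vector of length 2 * (output dimension)
    Returns (attention coefficients, new feature vector for i).
    """
    Wh = {j: matvec(W, h[j]) for j in set(neighborhood) | {i}}
    # Unnormalized score from the concatenation [W h_i || W h_j].
    scores = {j: leaky_relu(sum(ak * ck for ak, ck in zip(a, Wh[i] + Wh[j])))
              for j in neighborhood}
    # Softmax over the neighborhood (subtracting the max for numerical stability).
    top = max(scores.values())
    exps = {j: math.exp(s - top) for j, s in scores.items()}
    total = sum(exps.values())
    alpha = {j: e / total for j, e in exps.items()}
    # Attention-weighted sum of transformed neighbor features.
    out = [sum(alpha[j] * Wh[j][k] for j in neighborhood) for k in range(len(Wh[i]))]
    return alpha, out


h = {0: [1.0], 1: [2.0], 2: [0.0]}
W = [[1.0]]
a = [0.0, 1.0]  # with this choice, the score depends only on W h_j
alpha, h0_new = gat_node(0, [0, 1, 2], h, W, a)
print(alpha, h0_new)
```

Node 1 has the largest transformed feature here, so it receives the largest attention coefficient.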

(3) GraphSAGE: Scalability is a significant challenge for many GNN architectures. The feature vector of each node typically depends on information from its entire neighborhood, which becomes highly inefficient for large graphs with extensive neighborhoods. To address this issue, sampling modules have been proposed: instead of using all of the neighborhood information, a smaller subset of neighbors is selected for the propagation step. GraphSAGE is one of the first methods to take this approach. It was introduced in the paper "Inductive Representation Learning on Large Graphs" by Hamilton et al. in 2017.

- Abstract: https://arxiv.org/../1706.02216v4

- Papers with Code: https://paperswithcode.com/../graphsage
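The sampling idea can be sketched as follows: each node draws a uniform sample of at most `k` neighbors, averages their features (similar in spirit to GraphSAGE's mean aggregator), concatenates the result with its own features, and applies a weight matrix and a ReLU. This is a simplified illustration under stated assumptions: sampling without replacement, a single layer, and no normalization of the output embeddings (the paper's algorithm normalizes them). The function names are hypothetical.

```python
import random


def sample_neighbors(adjacency, node, k, rng):
    """Uniformly sample at most k neighbors of node (without replacement)."""
    nbrs = adjacency.get(node, [])
    if len(nbrs) <= k:
        return list(nbrs)
    return rng.sample(nbrs, k)


def sage_layer(features, adjacency, W, k, rng):
    """One GraphSAGE-style layer with a mean aggregator over sampled neighbors."""
    out = {}
    for v, h_v in features.items():
        sampled = sample_neighbors(adjacency, v, k, rng)
        if sampled:
            cols = zip(*(features[u] for u in sampled))
            h_nbr = [sum(col) / len(sampled) for col in cols]
        else:
            h_nbr = [0.0] * len(h_v)
        concat = h_v + h_nbr  # CONCAT(h_v, aggregated neighborhood)
        out[v] = [max(0.0, sum(w * x for w, x in zip(row, concat))) for row in W]
    return out


# A "hub" node with 1,000 neighbors: only k = 5 of them are read per layer.
adjacency = {"hub": [f"n{i}" for i in range(1000)]}
for i in range(1000):
    adjacency[f"n{i}"] = ["hub"]
features = {node: [1.0] for node in adjacency}
W = [[1.0, 1.0]]  # maps the 2-dimensional concatenation to 1 output

rng = random.Random(0)
out = sage_layer(features, adjacency, W, k=5, rng=rng)
print(out["hub"])  # [2.0]
```

The cost per node is now bounded by `k` instead of the node's degree, which is what makes this style of layer practical on large graphs.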

(4) Temporal Graph Networks (TGN): Dynamic graphs are graphs whose structure changes over time, including additions, modifications, and deletions of both nodes and edges. A dynamic graph can be represented as a sequence of events, each with a timestamp describing the change. Dynamic graphs occur in many applications, including social networks, financial transactions, and traffic. Rossi et al. introduced TGN as a method for learning on dynamic graphs in the paper "Temporal Graph Networks for Deep Learning on Dynamic Graphs" in 2020.

- Abstract: https://arxiv.org/../2006.10637

- Papers with Code: https://paperswithcode.com/../tgn
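The event-based view of a dynamic graph can be sketched with a per-node memory that is updated whenever a node takes part in a timestamped interaction, which is the core idea behind TGN's memory module. The sketch below is heavily simplified and its update rule is hypothetical: TGN uses learned message functions, a recurrent memory updater (such as a GRU), and a graph embedding module on top, none of which are reproduced here.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One timestamped interaction, e.g. a message or a transaction."""
    src: str
    dst: str
    t: float
    feat: float  # hypothetical scalar edge feature


def update_memory(mem, other_mem, dt, feat):
    """Hypothetical memory update: decay the old memory by the time elapsed
    since this node's last event, then mix in a message built from the
    other endpoint's memory and the event feature."""
    decay = math.exp(-dt)
    return [math.tanh(decay * m + o + feat) for m, o in zip(mem, other_mem)]


def process_events(events, dim=2):
    """Replay events in timestamp order, updating both endpoints' memories."""
    memory, last_seen = {}, {}
    for ev in sorted(events, key=lambda e: e.t):
        for node in (ev.src, ev.dst):
            memory.setdefault(node, [0.0] * dim)
            last_seen.setdefault(node, ev.t)
        src_mem, dst_mem = memory[ev.src], memory[ev.dst]  # states before this event
        memory[ev.src] = update_memory(src_mem, dst_mem, ev.t - last_seen[ev.src], ev.feat)
        memory[ev.dst] = update_memory(dst_mem, src_mem, ev.t - last_seen[ev.dst], ev.feat)
        last_seen[ev.src] = last_seen[ev.dst] = ev.t
    return memory, last_seen


events = [
    Event("alice", "bob", t=1.0, feat=0.5),
    Event("bob", "carol", t=2.0, feat=-0.2),
    Event("alice", "carol", t=3.5, feat=0.1),
]
memory, last_seen = process_events(events)
print(memory["alice"], last_seen)
```

Because events are replayed in timestamp order, the result does not depend on the order in which the events arrive in the input list.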

GNNs can be seen as part of the broader trend of deep learning, which has had a significant impact on the field in recent years.

Fig 1. The landscape of GNNs, from a review of methods and applications by Zhou J. et al., 2018.

What industries might use GNNs?

Graph Neural Networks have potential applications in a wide range of industries that include:

- Social media and e-commerce companies model social network structure to predict user behavior, such as user churn or purchase behavior.

- Healthcare companies process molecular and biological network data, such as protein-protein interaction networks, to predict drug-target interactions and accelerate drug discovery.

- Transportation companies process transportation networks, such as urban road networks, to predict traffic flow and optimize transportation routing.

- Pharmaceutical and biotech companies use MPNNs to predict the properties of molecules, such as drug efficacy and toxicity, which can help speed up the drug discovery process. MPNNs can also be used to predict protein-protein interactions, which can help explain the underlying mechanisms of diseases and guide the development of new drugs.

- Finance companies utilize GNNs to process financial networks, such as stock market networks, to predict stock prices and detect financial fraud.

These are just a few examples, and the applications of GNNs are rapidly expanding as researchers and companies continue to explore the potential of these algorithms.

What are graph neural networks used for?

GNNs are used for four core tasks:

- Node / Edge Classification to predict a label for each node or edge in the graph. This task is commonly used in social network analysis, protein-protein interaction analysis, and other graph-structured data applications.

- Link Prediction to predict the existence of edges between nodes in the graph. This task is commonly used in social network analysis and recommendation systems.

- Graph Classification to predict a label for the entire graph. This task is commonly used in molecular biology and cheminformatics.

- Graph Generation to generate new graphs, such as molecular structures or graphs with a particular topology. This task has potential applications in drug discovery and network design.
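Once a GNN has produced an embedding vector for every node, the first three tasks differ mainly in the small "head" placed on top of those embeddings. The sketch below assumes the embeddings are already computed and uses hypothetical helper names: a linear classifier per node, a sigmoid of a dot product for link prediction, and mean pooling followed by a linear classifier for graph classification. Graph generation needs a different kind of model and is not shown.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def classify(z, W):
    """Linear classifier: return the index of the highest-scoring class."""
    logits = [dot(row, z) for row in W]
    return max(range(len(logits)), key=logits.__getitem__)


def link_probability(z_u, z_v):
    """Link prediction: sigmoid of the dot product of two node embeddings."""
    return 1.0 / (1.0 + math.exp(-dot(z_u, z_v)))


def classify_graph(embeddings, W):
    """Graph classification: mean-pool all node embeddings, then classify."""
    pooled = [sum(col) / len(embeddings) for col in zip(*embeddings.values())]
    return classify(pooled, W)


# Hypothetical embeddings produced by some trained GNN.
z = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 2.0]}
W_cls = [[1.0, 0.0], [0.0, 1.0]]  # two classes

print(classify(z["a"], W_cls))           # node classification -> 0
print(link_probability(z["a"], z["b"]))  # link prediction -> 0.5
print(classify_graph(z, W_cls))          # graph classification -> 1
```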

What companies use GNNs, and for what problems?

Many companies across various industries have used Graph Neural Networks (GNNs) to solve a wide range of problems. Here are a few examples:

(1) Pinterest proposed a graph neural network-based recommendation system called PinSage. It is designed to generate high-quality recommendations for users based on the graph structure of their interests, represented as a graph of pins and boards. PinSage applies graph convolutions to this bipartite pin-board graph to learn pin embeddings that capture the complex relationships between different interests, and these embeddings are then used to recommend highly relevant pins to users.

- Abstract: https://arxiv.org/../1806.01973

- Papers with Code: https://paperswithcode.com/..networks-for-web

- Blog: https://medium.com/..-88795a107f48
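The PinSage paper describes defining each node's neighborhood with random walks rather than with its immediate graph neighbors: it simulates short walks from the node and keeps the T nodes with the highest normalized visit counts, which also act as importance weights during aggregation. A rough sketch of that neighborhood selection on a toy pin-board graph follows; the number of walks, the walk length, and the function name are hypothetical choices for illustration.

```python
import random
from collections import Counter


def importance_neighbors(adjacency, start, num_walks, walk_length, top_t, rng):
    """Return the top_t most-visited nodes from random walks starting at `start`,
    with visit counts normalized to sum to 1 (used as importance weights)."""
    visits = Counter()
    for _ in range(num_walks):
        node = start
        for _ in range(walk_length):
            nbrs = adjacency.get(node)
            if not nbrs:
                break
            node = rng.choice(nbrs)
            if node != start:
                visits[node] += 1
    top = visits.most_common(top_t)
    total = sum(count for _, count in top)
    return {node: count / total for node, count in top}


# Toy bipartite graph: pins p1..p4 saved to boards b1 and b2.
adjacency = {
    "p1": ["b1"], "p2": ["b1", "b2"], "p3": ["b2"], "p4": ["b2"],
    "b1": ["p1", "p2"], "b2": ["p2", "p3", "p4"],
}
weights = importance_neighbors(adjacency, "p1", num_walks=200, walk_length=4,
                               top_t=3, rng=random.Random(0))
print(weights)
```

Fixing the neighborhood size to T keeps the cost of each convolution bounded, even for very popular pins and boards.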

(2) Uber uses GNNs to predict its riders' estimated arrival time (ETA). The system leverages GNNs to model the relationships between road segments and traffic conditions, allowing it to capture traffic's impact on the ETA effectively. By combining this information with historical and real-time traffic data, the system can provide more accurate ETAs, improving the overall rider experience and reducing wait times.

- Abstract: https://arxiv.org/../2206.02127

- Papers with Code: https://paperswithcode.com/..-system-at

- Blog: https://www.uber.com/..-predicts-arrival-times/

(3) AlphaFold and AlphaFold 2 are AI-powered protein structure prediction systems developed by DeepMind. AlphaFold uses deep learning techniques, including GNNs, to predict the 3D structure of proteins from their amino acid sequences. AlphaFold 2, the second version of the system, can predict the structure of many proteins with near-atomic accuracy, contributing to a deeper understanding of biology and disease and opening up new avenues for drug discovery and development.

- Abstract (AlphaFold): https://www.nature.com/..-021-03819-2

- Papers with Code: https://paperswithcode.com/..-prediction

(4) Facebook (Meta) uses GNNs to analyze its data. In 2020, Huang et al. introduced the Correct and Smooth (C&S) technique in the paper "Combining Label Propagation and Simple Models Out-performs Graph Neural Networks". C&S post-processes the predictions of a simple base model with label propagation and delivers a significant performance boost, in many cases matching or outperforming GNNs.

- Abstract: https://arxiv.org/../2010.13993

- Papers with Code: https://paperswithcode.com/..-simple-models-1

- Blog: https://ai.facebook.com/..-graph-neural-networks/
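The C&S idea can be sketched as two propagation passes over the normalized adjacency matrix S = D^(-1/2) A D^(-1/2). The "correct" step spreads the base model's errors on labeled (training) nodes to their neighbors and adds them back to the predictions; the "smooth" step then propagates the corrected predictions, with the training nodes reset to their true labels. This is a simplified sketch: it uses a fixed `scale` for the corrections where the paper describes more careful scaling schemes, and the hyperparameter values and function names are hypothetical.

```python
import math


def sym_norm(adj):
    """S = D^-1/2 A D^-1/2 for a dense adjacency matrix."""
    deg = [sum(row) for row in adj]
    n = len(adj)
    return [[adj[i][j] / math.sqrt(deg[i] * deg[j]) if adj[i][j] else 0.0
             for j in range(n)] for i in range(n)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def propagate(S, X0, alpha, iters):
    """Iterate X <- (1 - alpha) * X0 + alpha * S X (label-propagation style)."""
    X = [row[:] for row in X0]
    for _ in range(iters):
        SX = matmul(S, X)
        X = [[(1 - alpha) * x0 + alpha * sx for x0, sx in zip(r0, rs)]
             for r0, rs in zip(X0, SX)]
    return X


def correct_and_smooth(adj, base_pred, labels, train_idx,
                       alpha1=0.5, alpha2=0.8, scale=1.0, iters=50):
    """labels[i] is a one-hot label vector for every i in train_idx."""
    S = sym_norm(adj)
    n, c = len(base_pred), len(base_pred[0])
    # Correct: residual errors on training nodes, zero elsewhere, then spread them.
    E0 = [[0.0] * c for _ in range(n)]
    for i in train_idx:
        E0[i] = [y - z for y, z in zip(labels[i], base_pred[i])]
    E = propagate(S, E0, alpha1, iters)
    corrected = [[z + scale * e for z, e in zip(zr, er)] for zr, er in zip(base_pred, E)]
    # Smooth: reset training nodes to their true labels, then propagate again.
    G0 = [row[:] for row in corrected]
    for i in train_idx:
        G0[i] = list(labels[i])
    return propagate(S, G0, alpha2, iters)


# Path graph 0 - 1 - 2 - 3; only nodes 0 (class 0) and 3 (class 1) are labeled,
# and the base model is completely unsure about every node.
adj = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
base = [[0.5, 0.5] for _ in range(4)]
labels = {0: [1.0, 0.0], 3: [0.0, 1.0]}
final = correct_and_smooth(adj, base, labels, train_idx=[0, 3])
print([max(range(2), key=row.__getitem__) for row in final])  # [0, 0, 1, 1]
```

The unlabeled nodes 1 and 2 inherit the class of the labeled node they sit closest to, even though the base model gave them no signal at all.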

(5) Amazon uses GNNs in many applications and helps its AWS customers train GNNs at scale. DistDGL is one of their publications. It minimizes the overhead of distributed computation by using a lightweight min-cut graph partitioning algorithm with multiple balancing constraints, and it reduces communication by replicating halo nodes and using sparse embedding updates. Together, these techniques make it possible to train high-quality models efficiently on very large graphs.

- Abstract: https://arxiv.org/../2010.05337

- Papers with Code: https://paperswithcode.com/..-graph-neural-network

- Blog: https://www.amazonaws.cn/../publications/
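To illustrate what "halo nodes" are, the sketch below takes a graph that has already been split into partitions (DistDGL obtains the split from a min-cut partitioner; here it is written by hand) and lists, for each partition, the nodes owned by other partitions that are adjacent to its own nodes. Keeping local copies of those nodes lets each machine look up its nodes' neighborhoods with less cross-machine communication. The function names are hypothetical.

```python
def halo_nodes(edges, partition):
    """For each partition, return the set of remote nodes adjacent to its local nodes."""
    halos = {p: set() for p in set(partition.values())}
    for u, v in edges:
        pu, pv = partition[u], partition[v]
        if pu != pv:  # a cut edge: each side needs a copy of the other endpoint
            halos[pu].add(v)
            halos[pv].add(u)
    return halos


def edge_cut(edges, partition):
    """Number of edges whose endpoints live in different partitions."""
    return sum(1 for u, v in edges if partition[u] != partition[v])


# A 6-node path split into two partitions of three nodes each.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
partition = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
print(halo_nodes(edges, partition))  # {0: {3}, 1: {2}}
print(edge_cut(edges, partition))    # 1
```

A min-cut partitioner tries to keep `edge_cut` small, which in turn keeps the halo sets, and the data that has to be replicated or communicated, small.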

(6) Google applies GNNs in many places, from Google Maps to Search. One example is their work on ETA prediction for Google Maps, carried out by DeepMind and described in "ETA Prediction with Graph Neural Networks in Google Maps" by Derrow-Pinion et al. in 2021.

- Abstract: https://arxiv.org/../2108.11482

- Papers with Code: https://paperswithcode.com/..-graph-neural-networks-in

- Blog: https://www.deepmind.com/..-graph-neural-networks

How to get started with GNNs?

Getting started with Graph Neural Networks (GNNs) requires a basic understanding of deep learning and graph theory. Here are some steps you can follow to get started:

(1) Familiarize yourself with graph theory concepts. Before diving into GNNs, it's important to understand graph theory concepts such as nodes, edges, adjacency matrices, degree, and centrality.

(2) Study deep learning basics. GNNs are a type of deep learning algorithm, so a solid understanding of neural networks, backpropagation, and gradient descent is crucial. Our Deep Learning Roadmap would be a good place to start.

(3) Read more articles:

- A thorough overview of the best Graph Neural Network architectures from AI Summer: https://theaisummer.com/gnn-architectures/

- A gentle introduction to GNNs from Distill: https://distill.pub/../gnn-intro

- All you need to know about GNNs from Neptune.ai: https://neptune.ai/..-gnn-applications

(4) Consider online video materials and practical tutorials to familiarize yourself with the mathematical concepts and with simple implementations, which will give you some light hands-on experience. We recommend content from:

- Stanford's CS224W. Here is the lecture playlist: https://www.youtube.com/..kDn

- Another, more practical playlist by Aleksa Gordić: https://www.youtube.com/..HF5

(5) Read the introductory GNN papers linked and referenced above. They will give you a good understanding of the basics of GNNs and how they work.

(6) Familiarize yourself with some of the frameworks and implementation standards for GNNs. Here are some tutorials to help:

- For TensorFlow: https://fidel-schaposnik.github.io/../tfgnn-intro/

- For PyTorch (PyTorch Geometric): https://pytorch-geometric.readthedocs.io/..gnn.html

- For DGL: https://docs.dgl.ai/

- PyTorch Geometric for Social Networks data: https://www.kaggle.com/..-social-networks

(7) Experiment with GNNs on simple problems. Start implementing simple GNN models on toy problems, such as node and graph classification. This will help you get a feel for how GNNs work and how to train and fine-tune them.

(8) Move on to more complex problems. Once you are comfortable with the basics, you can tackle more complex tasks, such as link prediction and graph generation, and more advanced architectures, such as graph attention networks.
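As a quick refresher on the graph theory concepts from step (1), the snippet below builds the adjacency matrix of a small undirected graph and derives each node's degree and its degree centrality (degree divided by n - 1, one of the simplest centrality measures). The graph and the helper name are made up for illustration.

```python
def adjacency_matrix(nodes, edges):
    """Dense adjacency matrix of an undirected, unweighted graph."""
    index = {node: i for i, node in enumerate(nodes)}
    A = [[0] * len(nodes) for _ in nodes]
    for u, v in edges:
        A[index[u]][index[v]] = 1
        A[index[v]][index[u]] = 1  # undirected: the matrix is symmetric
    return A


nodes = ["alice", "bob", "carol", "dave"]
edges = [("alice", "bob"), ("alice", "carol"), ("alice", "dave"), ("bob", "carol")]

A = adjacency_matrix(nodes, edges)
degree = {node: sum(row) for node, row in zip(nodes, A)}
centrality = {node: d / (len(nodes) - 1) for node, d in degree.items()}

print(A)                    # [[0, 1, 1, 1], [1, 0, 1, 0], [1, 1, 0, 0], [1, 0, 0, 0]]
print(degree)               # {'alice': 3, 'bob': 2, 'carol': 2, 'dave': 1}
print(centrality["alice"])  # 1.0
```

The adjacency matrix `A` is exactly the object that the GCN propagation rule normalizes, so getting comfortable with it pays off quickly.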

Conclusion

In recent years, Graph Neural Networks (GNNs) have emerged as a robust and practical solution for problems that can be represented as graphs. This article provides a high-level overview of GNNs and highlights the various applications of GNNs.

Thanks for reading!