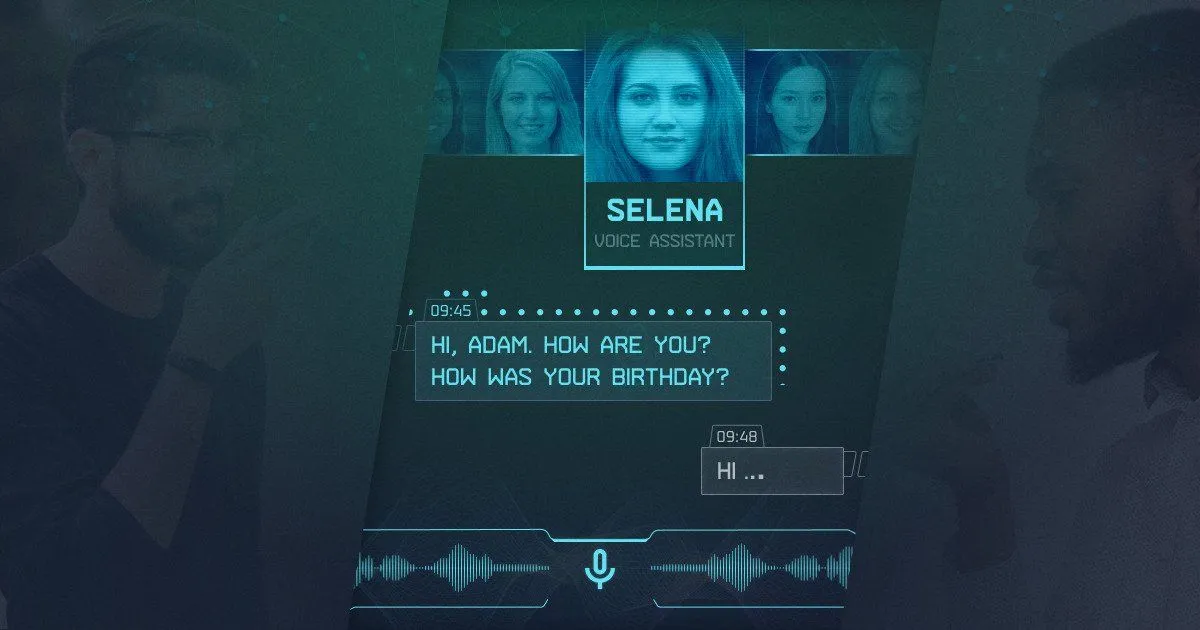

Empathetic & Intelligent Virtual Assistant

Talk to her and stop being lonely.

11 Jun 2020


Client Services

Industries

Virtual Assistant

Summary

- Millions of people, especially in Asia, suffer from loneliness.

- Our client is developing a dialog agent designed to act as the user's friend.

- The dialog agent can remember everything the user tells it and detect emotions both in the user's voice and in the content of what they say.

- DataRoot Labs (DRL) used advanced NLP technologies to build an end-to-end dialog agent that can be installed on Alexa or Google devices (currently available as a closed beta).

Tech Stack

AWS

GCP

PyTorch

Python

React Native

TensorFlow

Scala

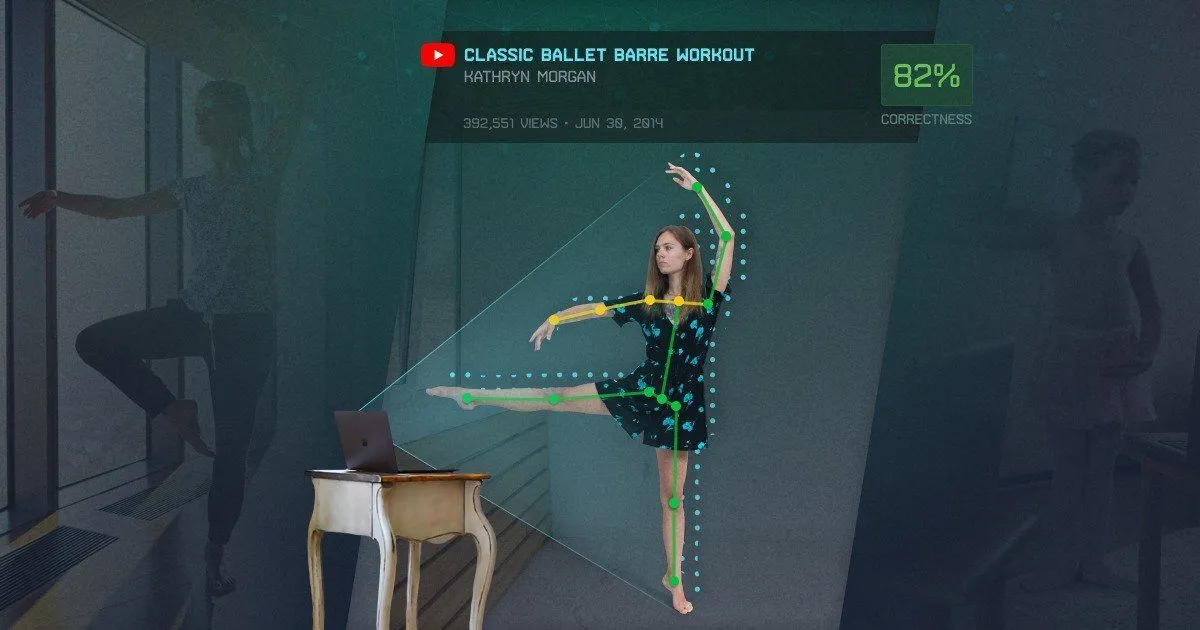


Tech Challenge

- The system has to be able to speak in different recognizable voices, such as Scarlett Johansson's.

- The voice has to be generated with 15 different emotions and intensity levels.

- Speech has to be generated in under 1/100 of a second (10 ms), regardless of the audio length.

- The system has to be able to chat with the user, show some common sense, know and remember a wide range of information, and work while disconnected from the internet (we don't want to create yet another Google Assistant).

- The system should be able to pass the Turing test (the imitation game).
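The latency requirement above is an absolute budget rather than a speed ratio, which makes it stricter than "faster than real time" for long utterances. A minimal sketch of the distinction, assuming latency means wall-clock synthesis time; the helper names and the sample timings are hypothetical:

```python
LATENCY_BUDGET_S = 0.01  # "less than 1/100 of a second", independent of audio length


def real_time_factor(synthesis_s: float, audio_s: float) -> float:
    """Synthesis time divided by audio duration; below 1.0 means faster than real time."""
    if audio_s <= 0:
        raise ValueError("audio duration must be positive")
    return synthesis_s / audio_s


def meets_budget(synthesis_s: float) -> bool:
    """Absolute check: the budget does not scale with the length of the audio."""
    return synthesis_s < LATENCY_BUDGET_S


if __name__ == "__main__":
    # (audio duration, measured synthesis time) pairs in seconds; illustrative numbers only
    for audio_s, synth_s in [(1.0, 0.008), (10.0, 0.009), (10.0, 0.05)]:
        rtf = real_time_factor(synth_s, audio_s)
        print(f"{audio_s:>5.1f} s audio: RTF={rtf:.4f}, within budget={meets_budget(synth_s)}")
```

The last pair synthesizes 200 times faster than real time (RTF 0.005) yet still misses the 10 ms target.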

Solution

- For voice synthesis, we used Tacotron 2, a sequence-to-sequence architecture with attention. It was implemented almost from scratch, since there were no good implementations at the time.

- For voice emotion control, Global Style Tokens (GST) were applied; a GST layer uses a multi-head attention mechanism to weight a bank of learned style embeddings.

- For faster-than-real-time inference, we used the WaveGlow vocoder, a flow-based network that, unlike the autoregressive WaveNet, generates audio samples in parallel.

- To get the best possible voice quality, additional data was recorded with voice actors, following a dedicated technical specification written for that purpose.

- For the dialog system, we use Blender + Large Attention + PPLM (Plug and Play Language Model) with a custom conditioning mechanism, which gives us control over dialogue acts, emotions, topics, contexts and tasks (Q&A, chit-chat, generation).

- The consistency problem is solved by parsing every utterance with SOA and NER (named entity recognition) mechanisms and updating the database. We found Neo4j to be the best fit for the knowledge base about the user, so the data is stored as a graph.

- To solve the dialog management problem, we apply reinforcement learning: DDPG, Rainbow and A3C with Imagination and Curiosity blocks, using dialogue acts, emotions, topics, contexts and tasks as the action space.

- To provide rewards for reinforcement learning, we built a WebSocket interface for Amazon Mechanical Turk through which users rate the dialogs.

- To gather good-quality dialogs, we randomly pair users to chat with and rate each other, without telling them whether they are talking to a bot or a human.
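The GST bullet above can be illustrated with a toy style-token layer: a reference embedding (in the full model, the output of a reference encoder over a spectrogram) acts as the query in multi-head attention over a bank of style tokens, and at inference time the attention weights can instead be set by hand to pick a style and scale its intensity. This is a pure-Python sketch under stated assumptions: the sizes are arbitrary, the weights are random rather than trained, and `StyleTokenLayer` is a hypothetical name, not the project's PyTorch code.

```python
import math
import random


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class StyleTokenLayer:
    """Toy GST layer: a reference embedding attends over a bank of style tokens."""

    def __init__(self, n_tokens=6, dim=8, n_heads=2, seed=0):
        if dim % n_heads:
            raise ValueError("dim must be divisible by n_heads")
        rng = random.Random(seed)
        self.n_heads, self.head_dim = n_heads, dim // n_heads
        # Token bank passed through tanh, following the GST paper; learned in a real model.
        self.tokens = [[math.tanh(x) for x in row] for row in rand_matrix(n_tokens, dim, rng)]
        self.w_q, self.w_k, self.w_v = (rand_matrix(dim, dim, rng) for _ in range(3))

    def _split(self, v):
        h = self.head_dim
        return [v[i * h:(i + 1) * h] for i in range(self.n_heads)]

    def attention_weights(self, ref_embedding):
        """Per-head softmax weights over the tokens; the query is the reference embedding."""
        q = self._split(matvec(self.w_q, ref_embedding))
        keys = [self._split(matvec(self.w_k, t)) for t in self.tokens]
        scale = math.sqrt(self.head_dim)
        return [
            softmax([sum(a * b for a, b in zip(q[h], k[h])) / scale for k in keys])
            for h in range(self.n_heads)
        ]

    def style_embedding(self, weights):
        """Concatenate each head's weighted sum of the projected tokens (the values)."""
        values = [self._split(matvec(self.w_v, t)) for t in self.tokens]
        out = []
        for h, w in enumerate(weights):
            for d in range(self.head_dim):
                out.append(sum(w[j] * values[j][h][d] for j in range(len(self.tokens))))
        return out


if __name__ == "__main__":
    layer = StyleTokenLayer()
    # Reference-driven path: weights inferred from a made-up reference embedding.
    weights = layer.attention_weights([0.1] * 8)
    print([round(x, 3) for x in weights[0]])
    # Manual control: attend only to token 2 in every head, scaled by an intensity factor.
    intensity = 1.5
    manual = [[intensity if j == 2 else 0.0 for j in range(6)] for _ in range(layer.n_heads)]
    print(len(layer.style_embedding(manual)))  # 8, i.e. n_heads * head_dim
```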
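One common way to expose controls such as dialogue act, emotion, topic and task to a generative dialog model is to prepend control codes to its input, in the spirit of CTRL-style conditioning. The project's own conditioning mechanism is custom and not described in this case study, so the following is only an illustration under assumptions: the tag format, the label sets, `Control` and `build_conditioned_input` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical label inventories; a real system would define its own.
DIALOGUE_ACTS = {"question", "statement", "greeting"}
EMOTIONS = {"neutral", "joy", "sadness", "surprise"}
TASKS = {"qa", "chitchat", "generation"}


@dataclass(frozen=True)
class Control:
    act: str
    emotion: str
    topic: str  # free-form topic label, not validated
    task: str

    def __post_init__(self):
        # Reject labels the model was not trained with.
        for value, allowed, name in (
            (self.act, DIALOGUE_ACTS, "act"),
            (self.emotion, EMOTIONS, "emotion"),
            (self.task, TASKS, "task"),
        ):
            if value not in allowed:
                raise ValueError(f"unknown {name}: {value!r}")


def build_conditioned_input(control: Control, context: Sequence[str], max_turns: int = 3) -> str:
    """Prefix control tags to the most recent dialog turns (the context window)."""
    tags = (
        f"<act={control.act}> <emotion={control.emotion}> "
        f"<topic={control.topic}> <task={control.task}>"
    )
    history = " <sep> ".join(context[-max_turns:])
    return f"{tags} {history}"


if __name__ == "__main__":
    ctrl = Control(act="question", emotion="joy", topic="music", task="chitchat")
    turns = ["Hi!", "Hey, how are you?", "Great, I went to a concert yesterday."]
    print(build_conditioned_input(ctrl, turns))
```

Keeping the controls as plain tokens means the same model can be steered at inference time simply by changing the prefix.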
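The consistency bullet above (parse each utterance, then update a Neo4j graph about the user) can be sketched as a function that turns an extracted fact into a parameterized Cypher statement. This assumes the SOA/NER stage has already reduced an utterance to a (relation, entity) fact about the user; `UserFact`, the relation whitelist and `to_cypher` are hypothetical. `MERGE` keeps repeated mentions from creating duplicate nodes or edges, and the optional clean-up removes a contradicting preference so the stored profile stays consistent. The resulting query and parameters could be passed to the official Neo4j Python driver via `session.run(query, params)`.

```python
from typing import Dict, NamedTuple, Tuple


class UserFact(NamedTuple):
    """A fact about the user, assumed to come from the SOA/NER parsing step."""
    relation: str  # e.g. "likes"
    entity: str    # e.g. "Jazz"


# Relationship types cannot be Cypher parameters, so they are checked against a whitelist.
ALLOWED_RELATIONS = {"LIKES", "DISLIKES", "HAS_PET", "LIVES_IN"}
OPPOSITES = {"LIKES": "DISLIKES", "DISLIKES": "LIKES"}


def to_cypher(user_id: str, fact: UserFact) -> Tuple[str, Dict[str, str]]:
    rel = fact.relation.upper()
    if rel not in ALLOWED_RELATIONS:
        raise ValueError(f"unsupported relation: {fact.relation!r}")
    query = (
        "MERGE (u:User {id: $user_id}) "
        "MERGE (e:Entity {name: $name}) "
        f"MERGE (u)-[:{rel}]->(e)"
    )
    if rel in OPPOSITES:
        # Drop a contradicting edge, e.g. an old DISLIKES when the user now LIKES the entity.
        query += f" WITH u, e OPTIONAL MATCH (u)-[old:{OPPOSITES[rel]}]->(e) DELETE old"
    # Normalize entity names so "Jazz" and " jazz " map to the same node.
    return query, {"user_id": user_id, "name": fact.entity.strip().lower()}


if __name__ == "__main__":
    query, params = to_cypher("user-42", UserFact("likes", "Jazz"))
    print(query)
    print(params)
```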
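The reinforcement learning setup above (dialogue acts, emotions and tasks as the action space; human ratings collected through Mechanical Turk as rewards) can be illustrated in miniature. The sketch deliberately swaps in a much simpler technique than DDPG, Rainbow or A3C: a context-free epsilon-greedy bandit over a small discrete action space, with a rating mapped to a reward in [-1, 1]. The label sets, the 1-5 rating scale, the simulated ratings and the class name are assumptions.

```python
import itertools
import random

# Hypothetical control dimensions; their Cartesian product is the discrete action space.
ACTS = ("question", "statement", "empathize")
EMOTIONS = ("neutral", "joy", "sadness")
TASKS = ("qa", "chitchat")
ACTIONS = list(itertools.product(ACTS, EMOTIONS, TASKS))  # 3 * 3 * 2 = 18 actions


def rating_to_reward(rating: int) -> float:
    """Map an assumed 1-5 human rating to a reward in [-1, 1]."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    return (rating - 3) / 2


class EpsilonGreedyPolicy:
    """Context-free bandit: tracks the mean reward of each action."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.values = {a: 0.0 for a in self.actions}
        self.counts = {a: 0 for a in self.actions}

    def select(self):
        # Explore with probability epsilon, otherwise exploit the best mean so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=self.values.__getitem__)

    def update(self, action, reward):
        self.counts[action] += 1
        # Incremental mean: v <- v + (r - v) / n
        self.values[action] += (reward - self.values[action]) / self.counts[action]


if __name__ == "__main__":
    policy = EpsilonGreedyPolicy(ACTIONS, epsilon=0.1)
    for _ in range(200):
        action = policy.select()
        # Simulated stand-in for a rating arriving over the WebSocket from a human judge.
        rating = 5 if action[0] == "empathize" else 2
        policy.update(action, rating_to_reward(rating))
    print(max(policy.values, key=policy.values.get))
```

Unlike the agents named above, this bandit ignores the dialog state, so it can only learn which control combination is rated best on average.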
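The blind pairing in the last bullet can be sketched as a random assignment in which each participant is matched either with another human or with the bot, and participants are never told which. The bot share, the `BOT` sentinel and `pair_participants` are hypothetical; how the project actually balances bot and human sessions is not stated.

```python
import random

BOT = "<bot>"  # sentinel partner meaning "routed to the dialog agent"


def pair_participants(user_ids, bot_share=0.5, seed=None):
    """Randomly pair users with each other or with the bot.

    Returns (participant, partner) tuples; partner is another user id or BOT.
    Participants are not told which kind of partner they got.
    """
    rng = random.Random(seed)
    users = list(user_ids)
    rng.shuffle(users)
    with_bot, humans = [], []
    for user in users:
        (with_bot if rng.random() < bot_share else humans).append(user)
    if len(humans) % 2:  # an odd user out talks to the bot instead of waiting
        with_bot.append(humans.pop())
    pairs = [(humans[i], humans[i + 1]) for i in range(0, len(humans), 2)]
    pairs += [(user, BOT) for user in with_bot]
    return pairs


if __name__ == "__main__":
    for a, b in pair_participants(["ann", "bo", "cy", "di", "ed"], seed=7):
        print(a, "<->", b)
```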

Impact

- The project is in active development and is currently purely technical, with no business component.

Have an idea? Let's discuss!


Yuliya Sychikova

COO @ DataRoot Labs

Do you have questions related to your AI-Powered project?

Talk to Yuliya. She will make sure everything is covered. Don't waste time googling: get answers from a relevant expert in under an hour.


Important copyright notice © DataRoot Labs and datarootlabs.com, 2026. Unauthorized use and/or duplication of this material without express and written permission from this site’s author and/or owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given to DataRoot Labs and datarootlabs.com with appropriate and specific direction to the original content.