Machine Learning Techniques in Eye Tracking Apps

Track human reading, evaluate speed, distinguish reading gaze movements from other activities, and annotate hard-to-read places.

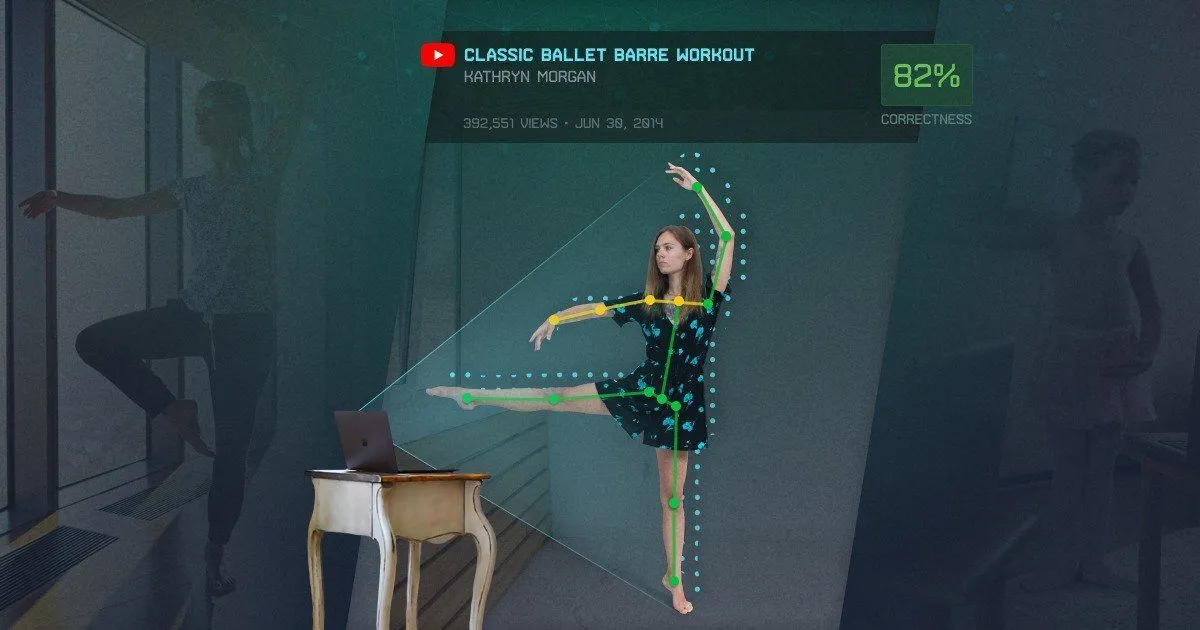

As one of the main providers of AI and machine learning consulting on the local market, our team partnered with Beehiveor R&D Labs on a joint research project on a reading assistant system (RAS). We were inspired by fitness apps and wrist trackers, which use accelerometers, heart rate sensors, GPS data, and other signals to help people improve their physical activities. Similarly, if we can track gaze movement, why not use this data to facilitate visual activities? We chose reading as the primary research objective because it is one of the activities people perform every day.

People encounter certain problems while reading, and everyone resolves them in their own way: rereading difficult parts of a text, googling unknown words, writing down details to remember. What if this could be automated? For instance, a system could track a person’s reading, evaluate reading speed, distinguish reading gaze movements from other activities, and annotate hard-to-read passages. Such features can be extremely useful for people who have to work through large amounts of text every day.

The definition

Before we get into the research process and findings, let’s define what a Reading Assistant System (RAS) is and why it is so important. A RAS is an AI- and gaze-tracking-based system for various reading analysis purposes. It can be used in many settings, and its applications in education, medicine, HR, marketing, and other areas are well documented.

The system will allow the user to:

- Track and store all reading materials and related reading pattern metadata.

- Search and filter content by reading pattern parameters (e.g., fixation time and rate make it possible to highlight only the areas of interest in the text).

- Get various analytical annotations generated in post-processing.

- Get real-time assistance options during reading (e.g. automated translation).
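As a rough illustration of filtering by fixation parameters, the sketch below aggregates total fixation time and fixation count per word and keeps only the words that reach both thresholds. The `Fixation` record, the assumption that fixations are already mapped to word indices, and the 400 ms / 2-fixation thresholds are hypothetical choices for illustration, not the parameters used in the project.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fixation:
    word_index: int     # hypothetical: index of the word this fixation was mapped to
    duration_ms: float  # how long the gaze stayed on that word


def areas_of_interest(fixations, min_total_ms=400.0, min_count=2):
    """Return sorted word indices whose total fixation time AND number of
    fixations both reach the (hypothetical) thresholds."""
    total_ms = defaultdict(float)
    count = defaultdict(int)
    for f in fixations:
        total_ms[f.word_index] += f.duration_ms
        count[f.word_index] += 1
    return sorted(
        w for w in total_ms
        if total_ms[w] >= min_total_ms and count[w] >= min_count
    )


fixations = [
    Fixation(0, 180), Fixation(1, 210), Fixation(2, 250),
    Fixation(3, 190), Fixation(2, 300),  # the reader jumps back to word 2
]
print(areas_of_interest(fixations))  # [2]
```

Requiring both a long total dwell time and repeated visits favors words the reader returned to, which is one simple proxy for a hard-to-read spot.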

Here’s how the technology will work in practice. The RAS program can automatically track the movement of your gaze and match it with the text on the screen. This makes it possible to process reading patterns in real time and store all the metadata for further analysis.
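Matching gaze to on-screen text can be pictured as a lookup from a gaze point to word bounding boxes. The sketch below is a minimal version under stated assumptions: word boxes are already available (for example from OCR; the `left`/`top`/`width`/`height` fields loosely mirror Tesseract’s word-level output), gaze coordinates and boxes share the same screen pixel space, and the `WordBox` class, `map_gaze_to_word` function, and 15 px tolerance are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class WordBox:
    text: str
    left: int
    top: int
    width: int
    height: int

    def contains(self, x, y):
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)

    def distance(self, x, y):
        # Distance from the point to the nearest edge of the box (0 if inside).
        dx = max(self.left - x, 0, x - (self.left + self.width))
        dy = max(self.top - y, 0, y - (self.top + self.height))
        return hypot(dx, dy)


def map_gaze_to_word(x, y, boxes, tolerance_px=15):
    """Return the word under the gaze point, else the nearest word within
    tolerance_px (gaze estimates are noisy), else None (gaze off the text)."""
    for box in boxes:
        if box.contains(x, y):
            return box.text
    nearest = min(boxes, key=lambda b: b.distance(x, y), default=None)
    if nearest is not None and nearest.distance(x, y) <= tolerance_px:
        return nearest.text
    return None


boxes = [WordBox("Reading", 10, 10, 70, 20), WordBox("assistant", 90, 10, 80, 20)]
print(map_gaze_to_word(40, 18, boxes))    # Reading
print(map_gaze_to_word(175, 18, boxes))   # assistant (just right of the box)
print(map_gaze_to_word(300, 200, boxes))  # None
```

The tolerance fallback matters because eye trackers rarely land exactly inside a word box; a real system would likely also correct for systematic vertical drift.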

Thanks to deep neural networks, a RAS can identify with up to 96% accuracy whether the person is reading at a given moment.
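The article does not describe the model inputs. As one plausible sketch, assuming the "feature extraction + MLP" approach listed among the algorithms in this article, a window of gaze points can be summarized into a few features that separate left-to-right reading from other viewing. The function name and the feature set below are hypothetical.

```python
from math import hypot
from statistics import mean


def window_features(points, sweep_px=200):
    """Summarize one window of (x, y) gaze points as a small feature dict.

    Hypothetical feature set: reading left-to-right text tends to give many
    small rightward steps, little vertical motion, and occasional long
    leftward jumps (return sweeps) to the start of the next line.
    """
    steps = [(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in zip(points, points[1:])]
    if not steps:
        raise ValueError("need at least two gaze points")
    dx = [s[0] for s in steps]
    dy = [s[1] for s in steps]
    path = sum(hypot(a, b) for a, b in steps)
    return {
        "rightward_ratio": sum(d > 0 for d in dx) / len(dx),
        "mean_abs_dy": mean(abs(d) for d in dy),
        "return_sweeps": sum(d < -sweep_px for d in dx),
        "horizontal_share": sum(abs(d) for d in dx) / path if path else 0.0,
    }


# One line read left to right, then a return sweep to the next line.
reading = [(x, 100) for x in range(100, 401, 50)] + [(100, 130), (150, 130)]
print(window_features(reading))
```

Computed over sliding windows and paired with reading/non-reading labels, vectors like this could be fed to a classifier such as scikit-learn’s `MLPClassifier`; sequence models such as CNNs or LSTMs could instead consume the raw gaze sequence.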

The hypothesis

The initial purpose of our research was threefold:

- To test the high-accuracy hypothesis.

- To create a real-time model.

- To research gaze patterns further.

Our team of three carried out the research over two months as part of DataRoot University activities. The primary task was to create a program that analyzes human visual activity from gaze movement and incorporates:

- Reading/non-reading classification.

- Detection of regressions, sweeps, and saccades.

- Gaze-to-text mapping and point-of-interest detection.
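The article does not say how regressions, sweeps, and saccades were detected. A common textbook approach is velocity-threshold identification (I-VT): samples moving faster than a threshold are treated as saccades, and runs of slower samples form fixations. The sketch below assumes samples arrive as `(t, x, y)` tuples in seconds and screen pixels and uses hypothetical thresholds (1000 px/s, 30 px line height, 200 px sweep). In practice, I-VT thresholds are usually set in degrees of visual angle per second and depend on the tracker’s sampling rate and the text layout.

```python
from math import hypot


def _centroid(group):
    return (sum(s[1] for s in group) / len(group), sum(s[2] for s in group) / len(group))


def detect_fixations(samples, velocity_threshold=1000.0, min_samples=3):
    """I-VT: label each (t, x, y) sample as fixation or saccade by its
    point-to-point velocity in px/s, then return the centroid of every run
    of at least min_samples consecutive fixation samples."""
    is_fixation = [True]  # the first sample has no predecessor
    for prev, cur in zip(samples, samples[1:]):
        speed = hypot(cur[1] - prev[1], cur[2] - prev[2]) / (cur[0] - prev[0])
        is_fixation.append(speed < velocity_threshold)
    fixations, run = [], []
    for sample, fix in zip(samples, is_fixation):
        if fix:
            run.append(sample)
            continue
        if len(run) >= min_samples:
            fixations.append(_centroid(run))
        run = []
    if len(run) >= min_samples:
        fixations.append(_centroid(run))
    return fixations


def label_saccades(fixations, line_height=30.0, sweep_px=200.0):
    """Label each jump between consecutive fixations (left-to-right text)."""
    labels = []
    for (x1, y1), (x2, y2) in zip(fixations, fixations[1:]):
        dx, dy = x2 - x1, y2 - y1
        if dx < -sweep_px and dy > line_height / 2:
            labels.append("sweep")       # long jump back and down to the next line
        elif dx < 0:
            labels.append("regression")  # backward jump to re-read earlier text
        else:
            labels.append("forward")     # ordinary progressive saccade
    return labels


# Synthetic 100 Hz recording: five fixations of five samples each.
centers = [(100, 100), (160, 100), (130, 100), (450, 100), (100, 130)]
samples = [(0.01 * (5 * i + k), x, y)
           for i, (x, y) in enumerate(centers) for k in range(5)]
fixations = detect_fixations(samples)
print(label_saccades(fixations))  # ['forward', 'regression', 'forward', 'sweep']
```

Separating sweeps from regressions by the vertical component is a simplification: a long backward jump on the same line is still counted as a regression.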

The execution

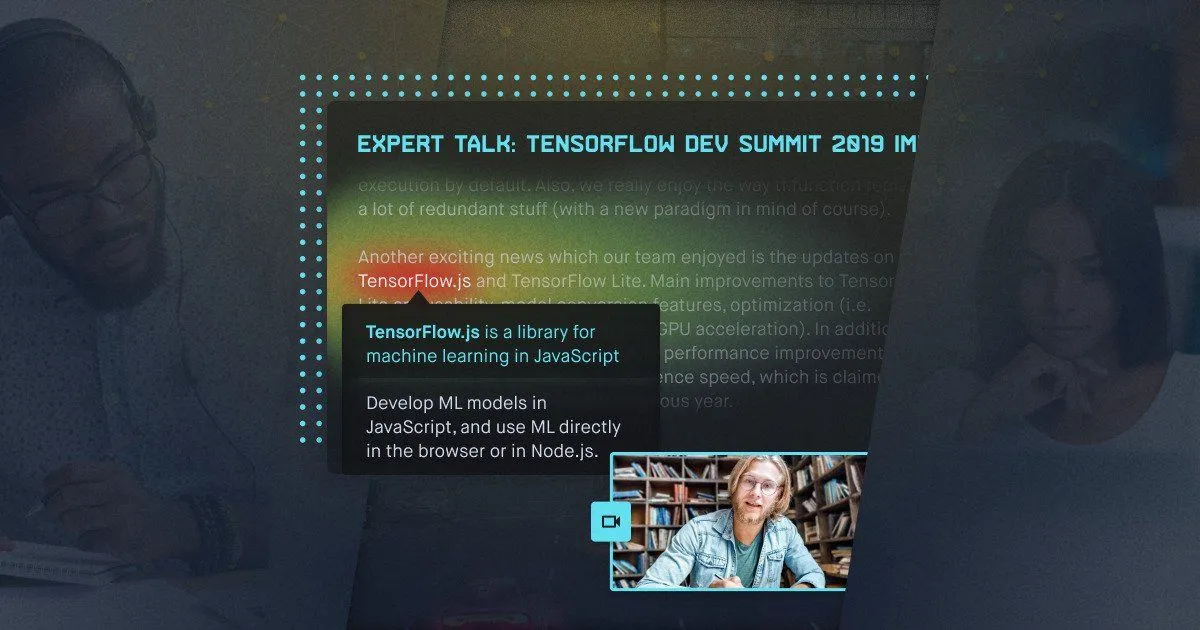

We mainly used open-source Python libraries:

- DS basics: NumPy, SciPy, Matplotlib, Seaborn, Plotly, pandas (data preparation, manipulation, visualization); statsmodels (time-series research).

- ML/DL: scikit-learn (clustering algorithms, time-series research), TensorFlow, Keras (CNN, LSTM).

- Computer vision: OpenCV, imutils, PIL, Tesseract (text recognition, eye-movement-to-text mapping).

The algorithms that we used:

- Time series analysis and feature extraction + MLP

- CNN

- LSTM

- K-Means

- Panorama stitching

- OCR with Tesseract
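K-Means appears in the list without a description of what was clustered. As a purely illustrative assumption, one use that fits a gaze-to-text pipeline is grouping fixations into text lines by their vertical position, which reduces the effect of vertical drift when attributing a fixation to a line. The sketch below is a dependency-free 1-D Lloyd’s k-means; the function name is hypothetical, the number of lines `k` is assumed to be known (for example, counted from OCR output), and scikit-learn’s `KMeans` could replace it in a real pipeline.

```python
def kmeans_1d(values, k, iterations=50):
    """Lloyd's k-means on scalar values (e.g. fixation y-coordinates).

    Centers start at evenly spaced order statistics of the data; each
    iteration assigns points to the nearest center and moves every center
    to the mean of its points, stopping early once the centers stop moving.
    """
    data = sorted(values)
    centers = [float(data[(len(data) - 1) * i // max(k - 1, 1)]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centers[c]))
            clusters[nearest].append(v)
        new_centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
        if new_centers == centers:
            break
        centers = new_centers
    labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
    return centers, labels


# Vertical positions (px) of nine fixations spread over three text lines.
ys = [98, 103, 101, 129, 133, 127, 158, 162, 161]
centers, line_of_fixation = kmeans_1d(ys, k=3)
print(line_of_fixation)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

Because the initial centers are taken in sorted order, cluster indices come out ordered from the top line of the page to the bottom one.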
