Accelerating Reinforcement Learning: The AgileRL Vision

Co-Founder and CTO Nicholas Ustaran-Anderegg on Breaking Barriers, Accelerating AI, and the Future of RLOps.

Nicholas Ustaran-Anderegg is the CTO and Co-Founder of AgileRL, an innovative UK-based company dedicated to empowering the development of superhuman-level artificial intelligence systems. Co-founded with CEO Param Kumar, AgileRL is committed to accelerating and democratizing access to reinforcement learning (RL). Its open-source framework speeds up training and hyperparameter tuning by a factor of 10 compared with leading RL libraries. Built on top of this framework, AgileRL's commercial-grade RLOps solution optimizes the whole reinforcement learning workflow, including simulation, training, deployment, and monitoring. With Nicholas leading the technical vision, AgileRL is lowering the barriers to entry for reinforcement learning, enabling companies to unlock sophisticated AI with unprecedented efficiency and performance.

Founded in 2023, AgileRL raised $2M in a Pre-Seed round, led by Counterview Capital, Octopus Ventures, and Entrepreneur First.

What motivated you to found AgileRL, and how do you believe it will revolutionize the AI space?

My co-founder Param and I founded AgileRL after recognizing an opportunity to fundamentally transform reinforcement learning. Working in the field, we experienced first-hand how the technical complexity and resource requirements created a significant barrier to innovation, limiting both who could use RL and how far they could push the technology.

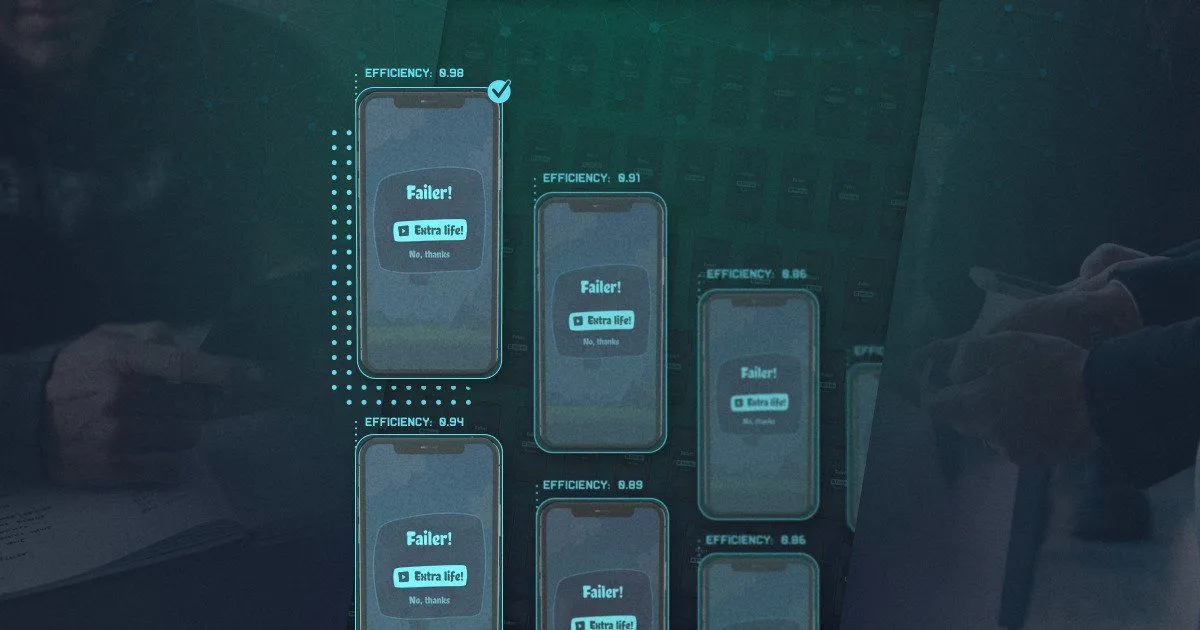

Our platform, Arena, addresses this challenge by optimizing the entire RL pipeline, reducing training time and computational costs by a factor of 10. This efficiency breakthrough serves two linked goals: enabling the development of increasingly sophisticated, superhuman AI systems, and making this capability accessible to organizations of all sizes.

What truly revolutionizes the AI space is this amplification effect. By removing technical bottlenecks, we're accelerating the advancement of reinforcement learning itself, allowing developers to create and iterate on more complex agents that achieve unprecedented performance. Simultaneously, we're expanding who can participate in this innovation, bringing powerful RL capabilities within reach of companies that previously couldn't justify the investment.

The result is an accelerated path to a future where highly performant, adaptive AI can solve previously intractable problems across every industry: not just in research labs or at tech giants, but wherever these solutions create value.

Your open-source framework delivers a 10x improvement in training speed and hyperparameter tuning compared to leading RL libraries. Can you tell us more about the key technical innovations that make that possible?

Our 10x performance improvement stems from several integrated technical innovations that fundamentally reimagine reinforcement learning infrastructure. We've pioneered evolutionary hyperparameter optimization that tunes hyperparameters automatically within a single training run. Our population-based training methods evolve both hyperparameters and neural network architectures simultaneously while sharing learning across the population. This replaces the traditional trial-and-error approach to hyperparameter tuning, in which many separate training runs are launched and compared, and improves performance by letting agents adapt to the learning environment as training progresses.

Our framework supports the complete spectrum of reinforcement learning paradigms: on-policy, off-policy, offline, multi-agent, and contextual multi-armed bandits, all with the same performance advantages. From my machine learning experience, I know how important flexibility is for building the best solution, so we have baked it into the AgileRL framework from the start. Users can choose any neural network architecture, register hyperparameters for mutation, and keep complete control over their system. This versatility means teams can apply our optimizations regardless of their specific RL approach.

For complex environments, we've also developed the AgileRL Skills wrapper, which enables hierarchical learning. It allows agents to break down challenging tasks into smaller, learnable sub-tasks, dramatically improving both learning efficiency and final performance on previously intractable problems.

Finally, our memory management system is engineered specifically for RL workloads, optimizing how experience replay buffers are stored and accessed to minimize computational overhead during training. We also enable experiences to be shared between the agents in a population, so each agent only has to collect a fraction of the samples it would otherwise need.

These innovations work together as an integrated system where each component amplifies the others, creating a platform that doesn't just incrementally improve performance but fundamentally expands what's possible with reinforcement learning.
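The evolutionary loop described in this answer can be illustrated with a small, framework-agnostic sketch. It is not AgileRL's API: the names `Member`, `SharedReplayBuffer`, `toy_fitness`, `tournament_select`, `mutate`, `evolve` and `run` are hypothetical, and `toy_fitness` is an assumed stand-in for actually training and evaluating an RL agent. The sketch shows tournament selection with elitism, mutation of both a learning rate and a toy hidden-layer width, and a single replay buffer shared by the whole population, so each member contributes only its own slice of the experience.

```python
import copy
import math
import random
from collections import deque
from dataclasses import dataclass


@dataclass
class Member:
    """One agent in the population: its hyperparameters and latest fitness."""
    hparams: dict
    fitness: float = float("-inf")


class SharedReplayBuffer:
    """One FIFO buffer that every population member writes to and samples from."""

    def __init__(self, capacity: int):
        self.data = deque(maxlen=capacity)

    def add(self, transition):
        self.data.append(transition)

    def sample(self, batch_size: int, rng: random.Random):
        return rng.sample(list(self.data), min(batch_size, len(self.data)))


def toy_fitness(hp: dict) -> float:
    # Hypothetical stand-in for "train for a while, then evaluate the agent":
    # the score peaks at lr = 1e-3 and a hidden layer of 64 units.
    return -abs(math.log10(hp["lr"]) + 3) - abs(hp["hidden"] - 64) / 64


def tournament_select(population, k, rng):
    """Sample k members at random and return a copy of the fittest one."""
    return copy.deepcopy(max(rng.sample(population, k), key=lambda m: m.fitness))


def mutate(member, rng):
    """Perturb either the learning rate or the (toy) architecture."""
    if rng.random() < 0.5:
        member.hparams["lr"] *= rng.choice([0.5, 2.0])
    else:
        member.hparams["hidden"] = max(16, member.hparams["hidden"] + rng.choice([-16, 16]))
    return member


def evolve(population, rng, k=3):
    """Keep the best member unchanged; fill the rest by selection plus mutation."""
    elite = copy.deepcopy(max(population, key=lambda m: m.fitness))
    children = [mutate(tournament_select(population, k, rng), rng)
                for _ in range(len(population) - 1)]
    return [elite] + children


def run(generations=30, pop_size=6, steps_per_member=100, capacity=10_000, seed=0):
    rng = random.Random(seed)
    buffer = SharedReplayBuffer(capacity)
    population = [Member({"lr": 10 ** rng.uniform(-5, -1),
                          "hidden": rng.choice([16, 32, 128, 256])})
                  for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for gen in range(generations):
        for i, member in enumerate(population):
            # Each member collects only its own share of the experience...
            for t in range(steps_per_member):
                buffer.add((gen, i, t))  # placeholder for (obs, action, reward, next_obs)
            # ...but samples training batches from the whole population's data.
            batch = buffer.sample(32, rng)  # a real agent would run a learning step here
            member.fitness = toy_fitness(member.hparams)
        history.append(max(m.fitness for m in population))
        population = evolve(population, rng)
    return history, buffer


history, buffer = run()
print(f"best fitness: first generation {history[0]:.3f}, last {history[-1]:.3f}")
```

Because the elite member is carried over unchanged, the best score can never regress from one generation to the next. With six members writing to one buffer, each member contributes only a sixth of the transitions that any single member can sample from.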

AgileRL's Arena solution streamlines end-to-end development across the RL pipeline, from simulation through training to deployment and monitoring. What were some of the challenges you experienced in creating such an integrated product, and how did you address them?

Creating Arena's end-to-end RL pipeline required overcoming several critical challenges, with training speed, seamless deployment, and an excellent user experience being our most ambitious targets.

The greatest technical obstacle we overcame was optimizing every aspect of the training pipeline to achieve unprecedented RL training speeds. While our open-source framework addresses the algorithmic fundamentals, Arena delivers the robust engineering infrastructure required to transform research-grade RL into production-ready systems. We completely redesigned the training architecture with custom distributed computing patterns and memory management systems that minimize communication overhead while maximizing parallelization. The result is extraordinarily fast training: experiments that once took weeks to complete now finish in hours.

Environment compatibility was another challenge, which we solved by developing a flexible simulation interface with built-in validation. This interface works seamlessly across domains while preserving our lightning-fast learning capabilities, and it checks that environments run correctly before valuable compute resources are committed. Deployment also presented significant challenges.

Traditional RL solutions require extensive engineering work to move from trained models to production systems. We developed a one-click deployment system that automatically handles the complex infrastructure requirements of reinforcement learning agents. This includes containerization, API creation, and scaling capabilities that ensure agents perform consistently regardless of the deployment environment.

Another important and challenging aspect was balancing ease of use with flexibility. We wanted to create a system accessible to organizations just starting with RL while still providing depth for advanced users. Our solution was to implement a layered architecture, with intuitive high-level interfaces supported by more granular controls for those who need them. This approach ensures that Arena grows with our users as their RL expertise develops.

Throughout development, close collaboration with early users ensured Arena addressed real-world RL implementation challenges while delivering on our core promise: making sophisticated reinforcement learning ridiculously fast to train, effortless to deploy, and accessible to teams regardless of their prior RL experience.

AgileRL raised over $2M in a pre-seed round in August 2023. How has this funding accelerated your business growth and product development?

The pre-seed funding has been transformative for AgileRL. First, it allowed us to expand our core technical team, bringing on specialized talent in reinforcement learning optimization, distributed systems, and DevOps. This acceleration in hiring has directly translated to faster development cycles and more comprehensive feature implementation.

We've also been able to significantly invest in our infrastructure, building out robust cloud deployment capabilities and testing environments that enable us to validate our platform across a wider range of use cases. This has been crucial for ensuring the reliability and performance our customers expect.

The funding has supported our open-source community growth initiatives, including developer advocacy, documentation improvements, and community events. The open-source platform is a fundamental part of our strategy, and these investments have helped us build a vibrant community around our technology.

On the commercial side, we've been able to accelerate our go-to-market strategy and engage with enterprise customers more effectively. We have already successfully onboarded several partners in different industries and can provide the support they need when implementing our technology.

Perhaps most importantly, the funding has given us the runway to be ambitious in our vision. Rather than settling for short-term compromises, we've been able to invest in solving the fundamental challenges in reinforcement learning that will deliver long-term value to our users and customers.

AgileRL specializes in RLOps — MLOps tailored for reinforcement learning. Why do you believe RLOps is so critical for the future of AI, and how does AgileRL address this requirement?

RLOps is critical for the future of AI because reinforcement learning represents the frontier of AI capabilities – systems that can learn, adapt, and make decisions in complex, dynamic environments. However, without robust operational infrastructure, these advanced capabilities remain academic curiosities rather than practical business solutions.

Traditional MLOps tools fall short when applied to reinforcement learning, for several reasons. RL systems have unique requirements around simulation environments, interactive data collection, and continuous adaptation that aren't addressed by conventional MLOps. Additionally, RL introduces complexities around safety, stability, and reproducibility that require specialized approaches.

At AgileRL, we've built our RLOps solution specifically to address these challenges and gain extra performance by designing for RL from the start. Our platform handles the unique simulation requirements of RL, managing the complex interplay between agent and environment during both training and deployment. We've implemented specialized monitoring systems that track not just model performance but also environment dynamics and agent behavior over time.

Our approach to RLOps also emphasizes reproducibility and version control across the entire RL pipeline – from environment configuration to agent policies to deployment parameters. This is essential for auditing, debugging, and iterative improvement of RL systems.
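One simple way to make runs auditable in the sense described here, sketched under our own assumptions rather than as AgileRL's implementation, is to record every setting that determines a run (environment configuration, agent hyperparameters, deployment parameters and random seed) and derive a stable fingerprint from it. The function name `run_fingerprint` and all config keys below are hypothetical; only `json` and `hashlib` from the Python standard library are used.

```python
import hashlib
import json


def run_fingerprint(env_config: dict, agent_config: dict,
                    deploy_config: dict, seed: int) -> str:
    """Derive a short, stable ID from every setting that determines a run."""
    record = {"env": env_config, "agent": agent_config,
              "deploy": deploy_config, "seed": seed}
    # sort_keys (applied recursively) makes the hash independent of key order.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical configuration for one run.
env = {"id": "CartPole-v1", "max_steps": 500}
agent = {"algo": "PPO", "lr": 3e-4, "hidden": [64, 64]}
deploy = {"replicas": 2}

run_id = run_fingerprint(env, agent, deploy, seed=42)
same = run_fingerprint({"max_steps": 500, "id": "CartPole-v1"}, agent, deploy, seed=42)
changed = run_fingerprint(env, {**agent, "lr": 1e-3}, deploy, seed=42)
print(run_id == same, run_id == changed)  # prints: True False
```

Storing this ID alongside the trained policy and its deployment record links each artifact back to the exact settings that produced it. A matching fingerprint pins the configuration, not the outcome: sources of nondeterminism such as GPU kernels can still make two runs with the same ID differ.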

Crucially, we've designed our RLOps tools to be accessible to organizations that may have ML expertise but are new to reinforcement learning. By abstracting away much of the operational complexity, we enable companies to focus on their domain-specific challenges rather than the infrastructure needed to support RL.

As AI systems become more autonomous and interactive, RLOps will only grow in importance. We're building AgileRL to be the foundation that supports this new generation of intelligent systems.

The mission of AgileRL includes making RL accessible, even though it has traditionally been resource-intensive. What are you doing to make this a reality?

At AgileRL, we're transforming reinforcement learning through a focus on exceptional performance and genuine accessibility. Our strategy has several key components:

Our advanced optimization techniques deliver unprecedented computational efficiency. The 10x speedup we've achieved means individuals can accomplish with a single GPU what previously required an entire cluster and a team of RL engineers. This not only makes RL feasible within normal infrastructure budgets but also enables more ambitious agent designs and faster iteration cycles for pushing performance boundaries.

Our suite of pre-built components accelerates development without compromising performance. From environment wrappers and optimized policy architectures to deployment patterns, these building blocks allow developers to focus on their domain-specific challenges while leveraging our performance-tuned implementations.

We've developed comprehensive educational resources and documentation that build both practical knowledge and theoretical understanding. Through tutorials, case studies, and direct support, we're helping organizations develop the expertise needed to create truly exceptional RL systems.

Finally, our strategic approach to open-source provides core functionality to everyone while offering advanced commercial features for production deployments. This ensures anyone can start with RL, while organizations with ambitious performance requirements can seamlessly scale up as their needs grow.

Together, these initiatives are making high-performance reinforcement learning accessible to a much broader range of organizations, accelerating both the adoption and advancement of this transformative technology.

Reflecting on your journey with AgileRL since its founding in January 2023, what have been the key milestones, and what are your aspirations for the future of the company?

Reflecting on our journey since founding AgileRL in January 2023, several key milestones stand out. The initial release of our open-source library was a defining moment, as we saw immediate traction and validation of our technical approach. The reaction from the community and their willingness to engage with us confirmed the market need for better RL tools.

Securing our pre-seed funding of $2M was crucial, giving us the resources to expand our team and accelerate development. Building our core team of RL specialists, each bringing unique expertise, has been another highlight – the talent we've assembled has been fundamental to our success.

The launch of our commercial Arena platform represented the evolution from an open-source library to a comprehensive RLOps solution. Seeing our first enterprise partners successfully deploy RL applications using our platform – some in domains where RL was previously considered too complex or expensive – has been particularly rewarding.

Looking to the future, our aspirations are ambitious. We aim to establish AgileRL as the definitive platform for reinforcement learning operations, similar to how tools like Databricks and Snowflake have become standards in their domains. We want to enable a thousand new applications of reinforcement learning across industries by making the technology accessible to organizations of all sizes.

We see enormous potential in expanding our platform to support emerging hybrid approaches that combine reinforcement learning with large language models and other AI techniques to build AI agents. These hybrid systems represent the future of AI, and we're positioning AgileRL as the infrastructure that enables their development and deployment.

Ultimately, we aspire to fundamentally change how intelligent systems are built. By making reinforcement learning accessible and operationally viable, we believe we can accelerate the development of truly adaptive, autonomous AI that can tackle some of the world's most complex challenges.